AGs and WSFC OS Rolling Upgrades: What Works and What Doesn’t

It’s been a crazy summer so far. I haven’t had much time to blog but an interesting scenario came up recently that I wanted to share with everyone. I have also missed writing technical posts, and this is a good one to scratch the itch.

In Windows Server 2016, Microsoft added the ability to do a rolling OS upgrade with a Windows Server Failover Cluster (WSFC). This is Microsoft’s official documentation. The SQL Server side of things is not well documented, especially for Always On Availability Groups (AGs), so I did a few tests. The results are fairly interesting. All start out with SQL Server 2012 SP4. The goal is to get to Windows Server 2016 and some version of SQL Server, whether it is the same or different.

A few things you should know

- A cluster OS rolling upgrade works/is supported N-1, so you can upgrade Windows Server 2012 R2 to 2016 or Windows Server 2016 to 2019, but not Windows Server 2012 R2 to 2019.

- Running validation will fail when you add a Windows Server 2016 node to a 2012 R2 WSFC.

- I strongly recommend only adding the upper version nodes with that version. I saw some weird things when I tried to do it the other way.

One of the reasons this upgrade method is important is that especially in virtualized or public cloud-based architectures, you’re no longer tied to hardware for OS uprgades so how do you upgrade clustered configurations of SQL Server?

Strong Warning

The configuration for a cluster rolling upgrade allows for mixed Windows Server versions to coexist in the same WSFC. This is NOT a deployment method. It is an upgrade method. DO NOT use this for normal use. Unfortunately, Microsoft did not put a time limit on how long you can run in this condition, so you could be stupid and do something like have a mixed Windows Server 2012 R2/2016 WSFC. Fire, ready, aim. The WSFC knows about this and you’ll see a warning with an Event ID of 1548.

Also, what is shown below when adding replicas to lower versions of SQL Server is also NOT a normal deployment method. It’s an upgrade tactic only. Don’t be dumb – especially since it’s a one way street once the AG fails over to the newer version.

If it was me, I would do all of this in one weekend if I could. Circumstances may not allow that, but don’t drag this on very long.

One very good reason to not stay this way is that when dealing with the cluster functional level, unless all nodes are at the higher version, you’re stuck at the older functionality/behaviors. A good example of a benefit you could not take advantage of is cloud witness in Windows Server 2016 if you are at a functional level of 8. 9 is Windows Server 2016 and 10 is Windows Server 2019.

The Tests

Detailed steps are not here, just the overall process to see what does/does not work. For a real production move, there are things I would add to this list but that isn’t what I was going for here. I also did not necessarily use the latest/greatest updates. I was testing process here so it was pretty quick, down, and dirty. There are also many more variants. You can try those yourself.

Test 1

2 x SQL Server 2012 SP4/Windows Server 2012 R2 nodes

2 x SQL Server 2012 SP4/Windows Server 2016 nodes

High level process:

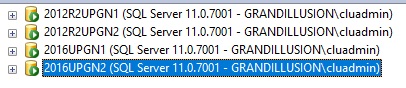

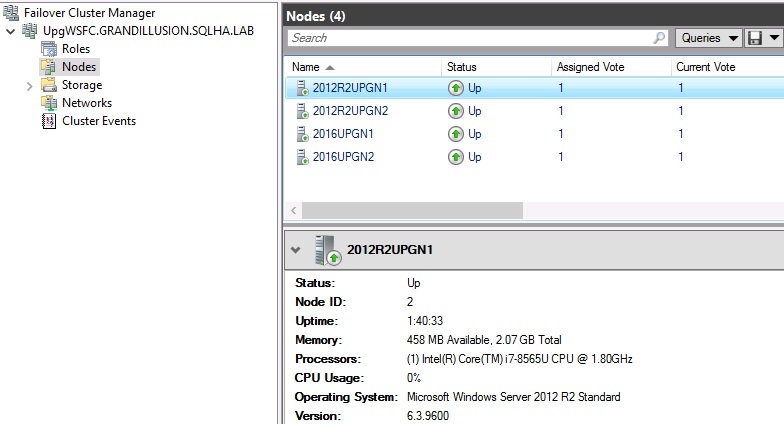

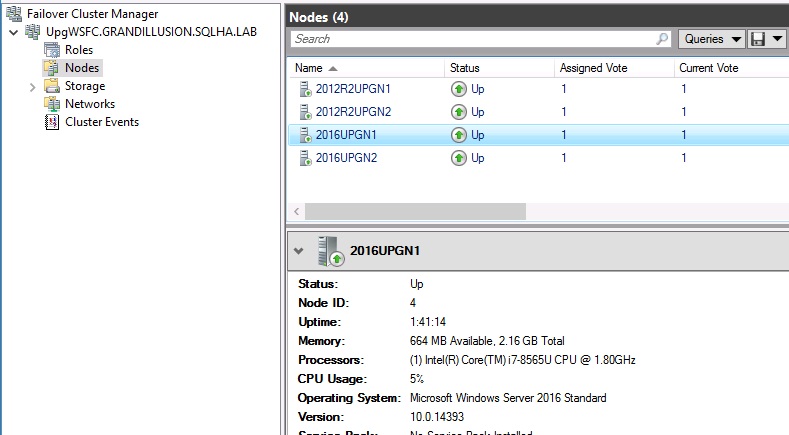

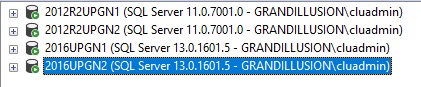

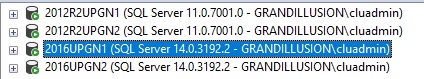

- Add the Windows Server 2016 nodes to the 2012 R2 WSFC. The WSFC is mixed, and looks similar to the screen shots below.

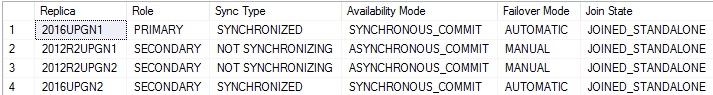

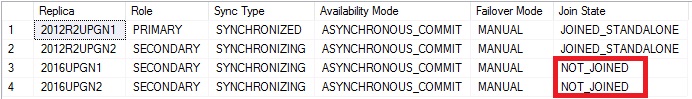

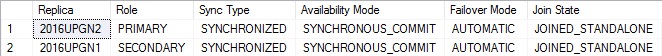

- I joined the instances on the Windows Server 2016 nodes as replicas to UpgAG. This query output shows that everything has joined and seemingly looks okay. Or is it?

- I then set 2012R2UPGN1 and 2016UPGN1 to synchronous data movement since I’m trying to go to WS2016. That works fine.

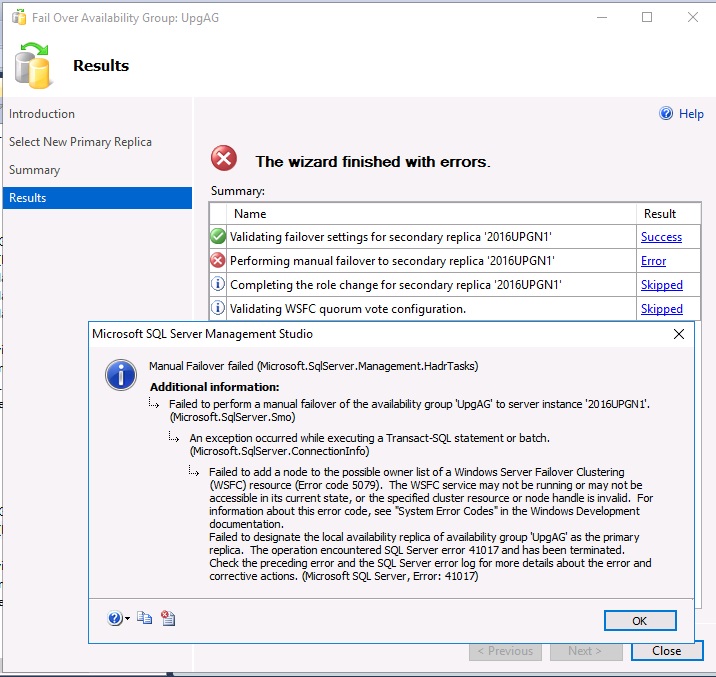

- I should be able to manually initiate a failover from 2012R2UPGN1 to 2016UPGN1 right? Wrong. For whatever reason, SQL Server 2012 in this configuration can’t update possible owners on the AG resource at the WSFC level.

Result – Does not work. I tried to get around this by manually dealing with possible owners, but the configuration was not having it. It looks like if you want to do a rolling cluster upgrade and stay on all SQL Server 2012, you’re out of luck.

Test 2

2 x SQL Server 2012 SP4/Windows Server 2012 R2 nodes

2 x SQL Server 2012 SP4/Windows Server 2016 nodes

High level process:

- Add the Windows Server 2016 nodes to the 2012 R2 WSFC.

- Using the configuration from Test 1 that bombed, perform an in-place upgrade to SQL Server to 2016 on the two Windows Server 2016 nodes. Since everything at SQL Server 2012 failed, I wanted to see if this would make a difference.

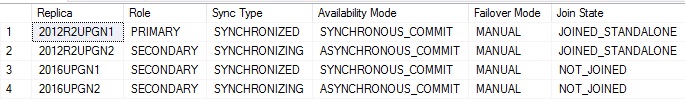

- Set 2012R2UPGN1 and 2016UPGN1 to synchronous.

- I failed UpgAG over to 2016UPGN1 from 2012R2UPGN1. This was successful, and the SQL Server 2016 replicas can now synchronize, but they cannot with the 2012 ones. That makes sense given 2012 is downlevel and upon failing over, the database was upgraded to 2016.

- Remove the SQL Server 2012 replicas from the AG.

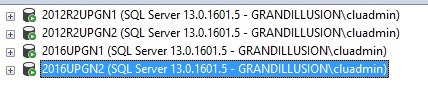

- Evict the Windows Server 2012 R2 nodes from the WSFC.

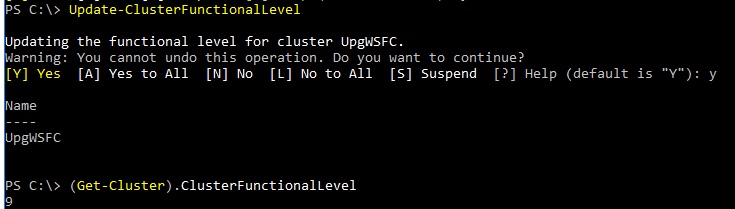

- Upgrade the WSFC functional level.

- Party.

Result – Success! This mixed combo worked and I was left with both Windows Server and SQL Server upgraded with minimal downtime and little fuss.

Test 3

2 x SQL Server 2012 SP4/Windows Server 2012 R2 nodes

2 x SQL Server 2012 SP4/Windows Server 2016 nodes

High level process:

- Add the Windows Server 2016 nodes to the 2012 R2 WSFC

- Join the instances on the Windows Server 2016 nodes as replicas to UpgAG.

- This time upgrade all four instances in place to SQL Server 2016.

- Set both 2012R2UPGN1 and 2016UPGN1 to synchronous.

- Manually fail UpgAG from 2012R2UPGN1 to 2016UPGN1. This succeeds.

- Remove the 2012 replicas from the AG.

- Evict the 2012 R2 nodes from the WSFC.

- Upgrade the WSFC functional level.

- Drink a beverage of your choice.

Result -Similar to Step 2, this worked with none of the problems of the all SQL Server 2012 configuration.

Test 4

2 x SQL Server 2012 SP4/Windows Server 2012 R2 nodes

2 x SQL Server 2017 CU 15 + GDR/Windows Server 2016 nodes

High level process:

- Add the Windows Server 2016 nodes to the 2012 R2 WSFC

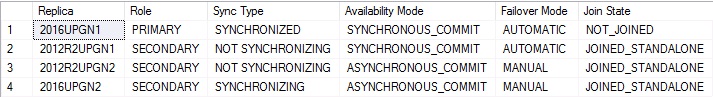

- When joining the SQL Server 2017 instances on the Windows Server 2016 nodes as replicas to UpgAG, there is a different behavior. Note the join state highlighted. That is not good. Can that be changed?

- Setting things to synchronous does not seem to help.

- Changing ownership of UpgAG to 2016UPGN1 does not move the needle much … but it moves it a little. Note that 2016UPGN2 now has a status of JOINED_STANDALONE.

- Removing the 2012 replicas from the AG has no change on 2016UPGN1.

- Failing UpgAG to 2016UPGN2 however gets everthing where it should be for a join state.

- Evict the 2012 R2 nodes from the WSFC.

- Upgrade the WSFC functional level.

Result – Success! This one was not straightforward, and I do not know why when you join a SQL Server 2017 replica to a lower level AG in this configuration it gives a status of NOT_JOINED. Clearly that did not happen in Test 2 or Test 3. That is a question for the dev team and another day.

Final Thoughts

While three of the configurations worked in the end, one gave me a bit of heartburn, and the fourth just didn’t work. As always, you should plan upgrades and test them before doing them on your production boxes. If you’re looking for help on upgrades or anything else SQL Server or infrastructure-related, reach out to us. We’d love to help you get to your destination!

Pingback: Rolling Windows Upgrades with AGs + WSFC – Curated SQL

This is EXACTLY what I needed Allan!! Incredibly timely blog post – I’m glad you had that itch that needed scratching! 😀

Hi Allan,

i am trying to follow your approach,

to give you some intro

Below nodes are part of same WSFC (windows 2016 and SQL server 2019)

SQL-1

SQL-2

Trying to add 2 new Nodes to the same WSFC (windows 2019 and SQL server 2019)

SQL-3

SQL-4

can create an AG and databases are in Sync .

SQL-1 and SQL-3 are part of same subnet range

SQL-2 and SQL-4 are part of same subnet range.

i can failover between sql-2 and sql-1 , but cannot failover to newly created SQL-3 and SQL-4. gives IP conflict and in use, even though they are not used.

Getting below error :

Failed to bring availability group ‘AWS_AAG2’ online. The operation timed out. If this is a Windows Server Failover Clustering (WSFC) availability group, verify that the local WSFC node is online. Then verify that the availability group resource exists in the WSFC cluster. If the problem persists, you might need to drop the availability group and create it again. (Microsoft SQL Server, Error: 41131)

I would need to understand your networking to figure things out. If you’re using AWS, its networking works a certain way.

None of this has anything to do with patching, and hopefully you tested all of this before you went live.

this N-1 is interesting, i have Windows/sql 2012 R2 SP4, and i want to do cluster OS+SQL rolling upgrade. not sure based on your article I would be able to do or not as apparently based on you blog it doesn’t qualify for N-1.

what do you recon @Allan?

N-1 means Windows Server 2016 in your case.

So for test 3 do you think it would have work more smoothly if you used SQL Server 2106 sp1/sp2 instead of SQL Server 2017?

I’m currently in the process of figuring out how to move from SQL Server 2012 SP4\Windows Server 2012 R2 to SQL Server 2019\Windows Server 2019 and of course when I tried to add the 2019 node it wouldn’t let me. So I went to forums and was told only a 2016 node can be added to a 2012 R2 Cluster. I had assumed they were talking about SQL Server AG’s as well but judging from your test it seems they only meant the WSFC. And while you were able to get 2017 to join and synchronize do you think it would have been smoother if it were SQL Server 2016 SP2?

That’s correct. The OS has always been N-1.

SQL Server tests a bunch of scenarios. If it’s in their supported matrix of upgrades, version should be in theory relative.