Patching Clustered SQL Server Instances with SQL Server 2008 and SQL Server 2008 R2

One of the things you will eventually have to do to an instance of SQL Server is apply one or more of the following: a community update (CU), service pack (SP), or hotfix. I recently wrote a whitepaper entitled “Applying Updates to a Clustered Instance of SQL Server 2008 or SQL Server 2008 R2” which can be downloaded from this link. It has all the background information you need, and includes how to do things like check for reboots, script thge installs, etc. The scripts for the whitepaper can be downloaded here.

That whitepaper describes the process on how to approach patching clustered instances of SQL Server and patching, but in its examples, covers two more difficult examples because things can be complex and those are not described anywhere else (hence me wanting to write the paper). Read the whitepaper for the background information, but I thought in this blog post I would present the most simple case: one instance on a two-node cluster. All changes will be denoted in red italics.

In this example, to start, the instance is currently owned by Node A and is at SQL Server 2008 SP1 and you are looking to update the instance to SQL Server 2008 Sp2. Both nodes can potentially own the instance at this point.

|

|

Node A |

Node B |

||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

|

Instance |

Yes | Yes | SP1 | No | Yes |

SP1 |

Table 1. Initial cluster state

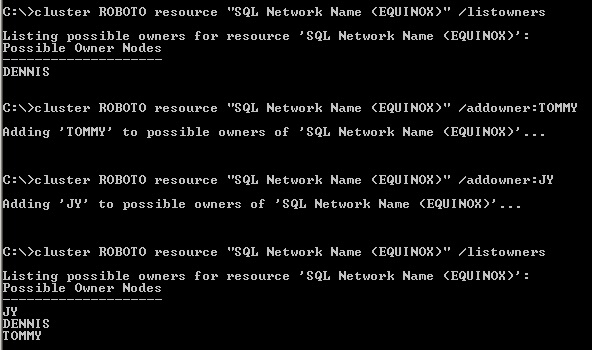

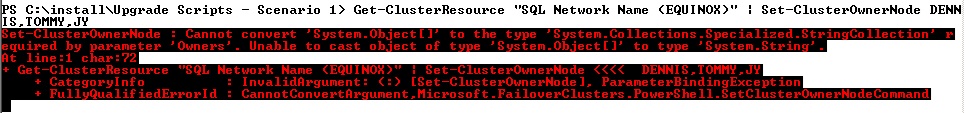

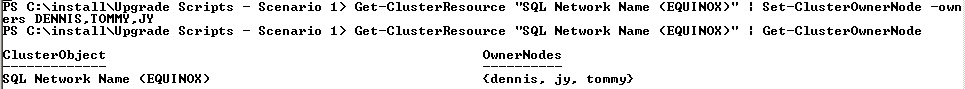

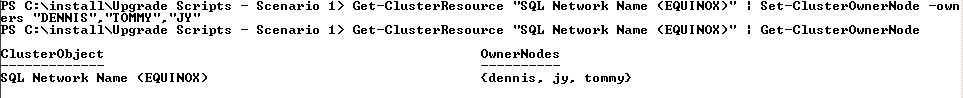

To start the process, you will first have to remove the node which does not own the instance as a possible owner of the network name resource for the instance. This process and why you need to do it is described in the whitepaper.

| Node A |

Node B |

|||||

| Owner | Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | Yes | Yes | SP1 | No | No |

SP1 |

Table 2. Cluster state after removing Node B as a possible owner

Install SP2 on the node which is not currently a possible owner of the instance. Once that it is done, it will look like Table 3.

| Node A |

Node B |

|||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | Yes | Yes | SP1 | No | No |

SP2 |

Table 3. Cluster state after patching the instance on Node B

Add Node B back as a possible owner of the network name resource. The cluster will now look like Table 4.

| Node A |

Node B |

|||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | Yes | Yes | SP1 | No | Yes |

SP2 |

Table 4. Cluster state after adding Node B back as a possible owner

You now need to fail the instance over to Node B to start the upgrade of the instance itself as shown in Table 5. This will be the first time you would have to schedule an outage. You should do this in a controlled fashion as to minimize the impact to end users and applications.

| Node A |

Node B |

|||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | No | Yes | SP1 | Yes | Yes |

SP2 |

Table 5. Failing the instance over to Node B

The instance itself and the databases that were online will now be upgraded. The time it takes to do this will be fairly short. Technically you could allow connections after the failover, but you may want to wait until you are able to complete the patching of Node A and test full failover. That is up to you.

As with Node B, remove Node A as a possible owner of the instance’s network name as shown in Table 6.

| Node A |

Node B |

|||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | No | No | SP1 | Yes | Yes |

SP2 |

Table 6. Removing Node A as a possible owner of the instance

Install SP2 on Node A as shown in Table 7.

| Node A |

Node B |

|||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | No | No | SP2 | Yes | Yes |

SP2 |

Table 7. Installing SP2 on Node A

Once Node A has been patched, add it back as a possible owner as shown in Table 8.

| Node A |

Node B |

|||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | No | Yes | SP2 | Yes | Yes |

SP2 |

Table 8. Adding Node A back as a possible owner of the instance

Finally, you should fail the instance back to Node A as shown in Table 8 not because you want it to “live” there (your instance should be able to run anywhere in the cluster assuming you did capacity planning correctly), but because you want to ensure that there will be no problems after the installations are done and that the instance can run on all nodes. If you have allowed connections into the instance already, you will have to schedule an outage or do it during an already scheduled outage.

| Node A |

Node B |

|||||

|

Owner |

Possible Owner | Version | Owner | Possible Owner |

Version |

|

| Instance | Yes | Yes | SP2 | No | Yes |

SP2 |

Table 9. Final state

Figure 1. Instance Configuration dialog in SQL Server 2008 R2 Setup

Figure 1. Instance Configuration dialog in SQL Server 2008 R2 Setup Figure 2. Network Name for the default instance EQUINOX in PowerShell

Figure 2. Network Name for the default instance EQUINOX in PowerShell Figure 3. Network Name for the default instance EQUINOX at the Group Level in Failover Cluster Manager

Figure 3. Network Name for the default instance EQUINOX at the Group Level in Failover Cluster Manager Figure 4. Network Name details for the default instance EQUINOX in Failover Cluster Manager

Figure 4. Network Name details for the default instance EQUINOX in Failover Cluster Manager Figure 5. The EQUINOX VCO in Active Directory

Figure 5. The EQUINOX VCO in Active Directory

Figure 8. Properties for the SQL Server resource for a default instance

Figure 8. Properties for the SQL Server resource for a default instance

Figure 10. Named clustered instance in Failover Cluster Manager

Figure 10. Named clustered instance in Failover Cluster Manager Figure 11. Properties for the SQL Server resource for a named instance

Figure 11. Properties for the SQL Server resource for a named instance Figure 12. Properties for the SQL Server Agent resource for a named instance

Figure 12. Properties for the SQL Server Agent resource for a named instance Figure 13. Instance IDs for multiple clustered instances

Figure 13. Instance IDs for multiple clustered instances