A Lesson for IT – Don’t Be Southwest Airlines

Whether you live in the United States or not, by now you have probably heard about what is going on (or not, as the case may be) with Southwest Airlines (SWA). I was away for the holiday weekend visiting a friend and on the way back, even the employees of the other airline I was flying were talking about it and how the systems had basically melted down. As I often say, you want to be reading the news, not making the headlines, and certainly not drawing the attention and ire of the US Department of Transportation (see their Twitter posts starting around December 26).

What Happened?

Looking in from the outside as a business continuity expert, I see a perfect storm of bad things converging at the same time. In the past week SWA has cancelled over 5,000 flights, leaving passengers stranded, angry, and often in bad situations. Why are/were things so bad? Three quick points:

- Mother Nature and the storms/weather that hit the US around the holiday and affected some of their busiest airports.

- Not having a hub-and-spoke model, which makes it harder to move the chess pieces (planes and people) around for things like weather events. This speaks to process and configuration as we think about it in IT.

- Fragile, legacy IT systems still involved in day-to-day operations. In the case of SWA, these are the systems that deal with flight and crew management. This problem is our good friend technical debt.

I feel bad for everyone involved – the customers affected, the employees who have to deal with the situation (especially the frontline ones who will feel the brunt of the customer wrath), and everyone in between.

Let me be clear: I don’t think SWA set out to ruin people’s travel around a holiday, nor was it their goal to draw the attention of the US Government. This is the reality of business and IT – things happen, often at inconvenient times. People are affected by said event. Hilarity does not ensue.

Let’s Talk Technical Debt

You’re never a hero for saving a proverbial $1 now when it will cost you $10 to deal with that problem later. Kicking the can down the road is a flawed, dangerous IT strategy. I’ve addressed tech debt and other related issues before (selected posts: “Technical Debt – The (Not So) Silent Crisis”, “Outages In An Increasingly Connected World”, “Security Is An Availability Problem”, and “Another Day, Another Outage”) so if you want to know the basics in more detail, read those.

SWA did upgrade some systems a few years back to give “the carrier more flexibility to improve the Customer Experience and enhance revenue performance.” Clearly the “Customer Experience” has been top notch over the past week. When you’re not flying and have to reimburse customers and figure things out, you LOSE money, not enhance revenue performance.

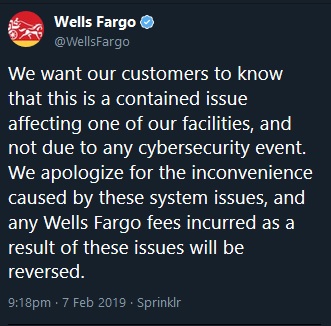

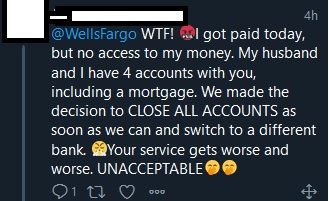

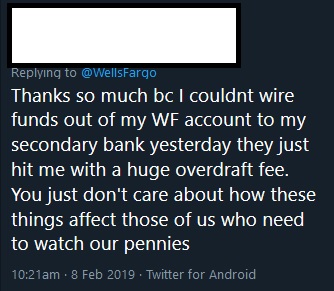

Availability goals should always be based in reality with real world data. How much does downtime cost the business – literally? What penalties – financial or otherwise – will be incurred? Does our solution mitigate those risks? It seems as if SWA either did not properly assess risk or worse, did not care. If it ain’t broke, don’t fix it, right? Wrong. According to this CNN report, SWA underinvested in its operations. Basic communication – including phone systems – was not working. Communication is crucial when the excrement hits the fan.
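To make the "how much does downtime cost" question concrete, here is a minimal back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: the `OutageEstimate` class, the `mitigation_pays_off` helper, and all of the dollar figures are hypothetical and have nothing to do with SWA's actual numbers. Plug in your own real world data.

```python
from dataclasses import dataclass


@dataclass
class OutageEstimate:
    """Hypothetical cost inputs for a single outage (all values are assumptions)."""
    revenue_per_hour: float  # revenue lost for every hour the system is down
    penalties: float         # one-time fines, refunds, SLA credits, etc.
    recovery_costs: float    # one-time overtime, vendor fees, cleanup, etc.

    def total_cost(self, hours_down: float) -> float:
        """Lost revenue over the outage plus the one-time costs."""
        return self.revenue_per_hour * hours_down + self.penalties + self.recovery_costs


def mitigation_pays_off(estimate: OutageEstimate,
                        hours_down: float,
                        outages_per_year: float,
                        mitigation_cost_per_year: float) -> bool:
    """True if the expected yearly outage loss exceeds the yearly cost of mitigating it."""
    expected_annual_loss = estimate.total_cost(hours_down) * outages_per_year
    return expected_annual_loss > mitigation_cost_per_year


# Illustrative numbers only: a 4-hour outage expected once every two years.
estimate = OutageEstimate(revenue_per_hour=10_000, penalties=5_000, recovery_costs=2_000)
print(estimate.total_cost(hours_down=4))
print(mitigation_pays_off(estimate, hours_down=4, outages_per_year=0.5,
                          mitigation_cost_per_year=20_000))
```

This deliberately ignores the harder-to-quantify items such as reputation damage, which usually push the real cost higher, not lower.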

Andrew Watterson, SWA’s Chief Operating Officer, blamed the outdated scheduling software in a company call. The quotes from the call in the CNN article are telling.

I get that for large companies it’s hard to rip out existing systems, especially when you cannot tolerate much – if any – downtime. I spent the better part of the past 25+ years helping customers architect solutions (and will continue to do so at Pure) that perform well, are secure, and are resilient/highly available. Choices have consequences, and customers need to make the right ones, especially when sunsetting older solutions that are very important. Always be looking forward.

How Do You Avoid Technical Debt?

I have worked with enough customers over the years to know that most people reading this blog have at least one legacy system hanging around. You know the one. It’s that system that if you look at it sideways, it acts up. That’s the one (or ones) you need a plan for sooner rather than later.

Being honest, tech debt is hard to avoid 100% of the time but you need to try. Be proactive, not reactive. Know when things like SQL Server, Windows Server, and other third-party software go out of support. There are many nuances to dealing with technical debt, which also includes ensuring that all staff are trained and their skills are kept current. Technical debt is a people issue, too.
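One way to be proactive is to keep a simple inventory of what you run and when each item goes out of support, then review it on a schedule. Below is a minimal sketch in Python. The system names and dates are placeholders I made up for illustration, and `support_status` and `review_inventory` are hypothetical helpers; take the real dates from each vendor's published lifecycle documentation.

```python
from datetime import date, timedelta


def support_status(end_of_support: date, today: date, warn_days: int = 365) -> str:
    """Classify one system as 'unsupported', 'expiring', or 'supported'."""
    if end_of_support < today:
        return "unsupported"
    if end_of_support - today <= timedelta(days=warn_days):
        return "expiring"
    return "supported"


def review_inventory(inventory: dict[str, date], today: date,
                     warn_days: int = 365) -> dict[str, str]:
    """Return only the systems that need a plan: already unsupported or expiring soon."""
    flagged = {}
    for name, end_of_support in inventory.items():
        status = support_status(end_of_support, today, warn_days)
        if status != "supported":
            flagged[name] = status
    return flagged


# Placeholder inventory: names and dates are invented, not real vendor dates.
inventory = {
    "legacy-scheduling-db": date(2020, 1, 1),
    "crew-app-server": date(2023, 6, 1),
    "new-reporting-stack": date(2030, 1, 1),
}

for name, status in review_inventory(inventory, today=date(2023, 1, 1)).items():
    print(f"{name}: {status}")
```

A list like this will not fix anything by itself, but it turns "that system you look at sideways" into a dated item someone has to own.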

Know your core functionality and what you need to achieve. Getting lost in whiz-bang, fancy features and analytics does not mean a hill of beans if your company’s core goals are not met. In the case of SWA, they cannot move people from Point A to Point B. Let’s not even get into the potential hit to their reputation and bottom line that comes along with a failure of this magnitude.

Don’t become the next headline. Planning for obsolescence as soon as a system is brought online is really the exercise that needs to happen. If you do not bake obsolescence in as a feature from day one, you may be the next SWA or worse; events like this can take the business out permanently, too. Unemployment is not the goal.

What are your thoughts? Have you been in similar situations and if so, how did you get past the issue(s)?