Revisiting Storage Spaces Direct and SQL Server FCIs

Let me be up front here: I really do like Storage Spaces Direct (S2D). I’ve been talking about it a lot in presentations (including just recently at SQLBits – click here to see the video), and first mentioned it in more detail back in 2016 around a benchmark with SQL Server. It makes implementing failover cluster instances (FCIs) *so* much easier (especially under virtualization) and it adds a lot of IO scalability when done right. It’s a great architecture if you have the need. We’ve helped customers plan it, and if you need help, we can do the same for you. Just contact us.

For those of you unfamiliar with S2D, it’s a way to create storage for FCIs using storage that is local to the nodes themselves – not via SAN, iSCSI, etc. All of the official Microsoft documentation can be found here (no real mention of SQL Server), and if you want to know more, see my presentation linked above. I also teach S2D in some of my classes, where you may even get hands-on experience with it.

That said, there are a few gotchas, some of which unfortunately affect SQL Server architectures – one of which could be a temporary showstopper for some, at least in its current form in Windows Server 2016. Let me explain.

Arguably, the biggest thing about S2D is that the solutions currently have to be certified (see this bit of documentation from MS for more detail). This obviously doesn’t really affect, say, virtualized versions or ones up in the public cloud such as in Azure in a meaningful way, but it’s still technically a requirement, much like logoed hardware for Windows Server supportability. Anyone want to point me to the logo stamped on your VMs? Didn’t think so. Now, from a pure FCI perspective, none of this is an issue. The way a Windows Server failover cluster (WSFC) is currently designed, it expects that all nodes participating in the WSFC are also using S2D. Why am I mentioning this? Disaster recovery.

Because they require shared storage, FCIs are more often than not a “local” HA solution, and you add something else to make them business continuity friendly, such as an AG, log shipping, or underlying storage-based replication that is transparent to the FCI. For an FCI using traditional storage methods such as a SAN, none of this is a problem. It all just works and is supported just fine. Storage Replica (SR) in Windows Server 2016 (also Datacenter Edition like S2D) is also a great option for FCIs.

However, Storage Replica and Storage Spaces Direct do not currently work together in Windows Server 2016, and I am hoping they will in the future. SR cannot stretch an S2D WSFC right now. That means the D/R aspect of S2D with FCIs has to come in some other way. Here’s the Uservoice item for stretching an S2D WSFC to another site. It should be noted that as of the writing of this blog post, Windows Server 2019 was recently announced, but nothing I’ve seen publicly points to SR and S2D working together in that upcoming release, nor would I bank on that.

Let’s add AGs to the party. In a traditional Windows Server-based AG, an instance participating as a replica can be standalone or an FCI. FCI + AG is a fully supported architecture from a pure WSFC and SQL Server standpoint right now. S2D complicates this, though. Why?

S2D WSFC configurations currently do not expect a non-S2D node. So if you have an FCI across n WSFC nodes with S2D for storage, and then want to extend that with an AG, it’s technically not an architecture that is currently supported in the traditional sense. Does it work? Yes, I’ve configured it. Will Microsoft give you best effort support if you’ve done this and run into an issue? Absolutely, but at some point, if it’s a serious issue, they could say “This isn’t a supported architecture, implement something supported.”

S2D across multiple sites is not an architecture that is currently supported, either. FCI + AG would be stretching the S2D WSFC, albeit in a non-traditional way. Since stretched S2D clusters are not supported anyway … it’s “complicated”. Yes, from a pure SQL Server point of view (and my perspective, quite frankly), the FCI + AG technically isn’t a stretched S2D WSFC, but in a way it is because of the mixing of S2D and non-S2D nodes in the same S2D WSFC. I can see both sides of the coin here.

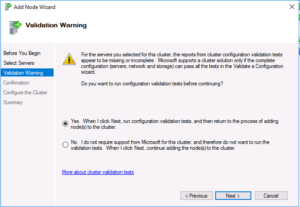

If you try to add a non-S2D node to a WSFC configured to use S2D for storage, here’s what you see during the validation process in Windows Server 2016:

How do you achieve D/R right now with S2D-based FCIs? Outside of platform-based methods for things like virtualization and IaaS, at the OS/SQL Server layer, log shipping is the easiest way. No clustered anything needs to be involved. SQL Server 2017 has the NONE-type AG, which does not require any clustering whatsoever, but I’d avoid that for reasons I’ve discussed elsewhere. A Distributed AG would also technically work since it would span two different WSFCs; it is not a stretched single WSFC with S2D. Only the nodes participating in the S2D-based FCI would be in the same WSFC. Distributed AGs are an Enterprise Edition-only feature, which rules them out for many people.
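
To make those choices easier to compare, here is a small Python sketch that simply encodes the options from the paragraph above as data and filters them by SQL Server edition. It is only an illustration of the reasoning in this post, not a Microsoft support matrix; the names `DrOption`, `OPTIONS` and `dr_options` are made up for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DrOption:
    """One D/R approach for an S2D-based FCI, as described in this post."""
    name: str
    needs_enterprise: bool  # True if the post says it is Enterprise Edition-only
    note: str


# Hypothetical encoding of the options discussed above (Windows Server 2016 era).
OPTIONS = [
    DrOption("log shipping", False,
             "no clustering involved; the easiest way"),
    DrOption("NONE-type AG", False,
             "SQL Server 2017, needs no clustering, but this post advises avoiding it"),
    DrOption("distributed AG", True,
             "spans two separate WSFCs, so the S2D WSFC itself is not stretched"),
]


def dr_options(enterprise_edition: bool) -> List[str]:
    """Return the names of the options available for the given edition."""
    return [o.name for o in OPTIONS
            if enterprise_edition or not o.needs_enterprise]


if __name__ == "__main__":
    print(dr_options(enterprise_edition=False))
    print(dr_options(enterprise_edition=True))
```

Without Enterprise Edition the distributed AG drops off the list, which is why log shipping ends up being the practical default for many S2D-based FCIs.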

Bottom line: right now, if you want to combine FCIs and AGs, the safest and currently the only fully supported way is to use traditional storage methods for your FCIs that are not S2D-based. I really hope that Microsoft officially supports the FCI + AG scenario where the FCI uses S2D in the near future. Stay tuned!